Translation quality, accuracy, and fluency: what can you expect from KUDO AI? Here’s the outcome of our tests, and the methodology behind it.

In our recent A-Z guide to AI translation quality, our CTO and world-leading language technology expert concluded that, as of today, we can only gauge an approximate ‘score’ for the components of machine translation quality. And he meant it: there is no universally accepted definition of ‘translation quality’, nor is there a universally accepted way to assess it.

So, when it came to providing our clients with an objective answer to the common question of ‘what quality and accuracy can I expect from KUDO AI?’, we had to get a little creative. Here’s the outcome, and here’s how we did it.

Testing AI translation quality

The three pillars of translation quality are accuracy, fluency, and latency, so to have something close to a quality ‘score’, we needed to assess these three areas independently.

Parameters: a blind evaluation of the output of our Speech Translator on a random, diverse corpus of speeches (corporate and technical presentations, political speeches, scientific talks).

Testers: for the fairest evaluation possible, we called on three categories of testers—linguists (including language interpreters), bilingual people, and end users (our clients).

Scoring: we asked individuals to rate KUDO AI on a Likert scale from 0 to 5 for each sentence, once for fluency and once for accuracy. Latency is the only aspect of translation quality that can be measured objectively, as the seconds or words of delay between a person speaking and the translation starting, so for that we let machines do the counting.
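To make the scoring step concrete, here is a minimal Python sketch of how per-sentence ratings could be collated into a single average per dimension. The ratings, the field layout, and the `average_scores` helper are hypothetical and for illustration only, and the unweighted mean across all testers is an assumption rather than a description of our exact aggregation.

```python
# Hypothetical ratings: (tester_group, sentence_id, fluency, accuracy),
# each score on the 0-5 Likert scale. These are NOT the real test results.
ratings = [
    ("linguist", 1, 4, 5),
    ("linguist", 2, 3, 4),
    ("bilingual", 1, 5, 4),
    ("bilingual", 2, 4, 4),
    ("client", 1, 4, 5),
    ("client", 2, 4, 3),
]


def average_scores(rows):
    """Return the unweighted mean (fluency, accuracy) over all ratings."""
    if not rows:
        raise ValueError("at least one rating is required")
    for _, _, fluency, accuracy in rows:
        if not (0 <= fluency <= 5 and 0 <= accuracy <= 5):
            raise ValueError("Likert scores must lie between 0 and 5")
    fluency_avg = sum(r[2] for r in rows) / len(rows)
    accuracy_avg = sum(r[3] for r in rows) / len(rows)
    return round(fluency_avg, 2), round(accuracy_avg, 2)


fluency_avg, accuracy_avg = average_scores(ratings)
print(f"Fluency: {fluency_avg}/5")
print(f"Accuracy: {accuracy_avg}/5")
```

A weighted mean (for example, giving linguists more weight than end users) would be an equally valid design choice; the point is simply that each dimension is averaged separately.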

Fluency and accuracy results

Fluency: 4.1/5

Accuracy: 4.25/5

These are the average scores from the collated results we received from linguists, bilingual users, and clients in May of this year. The tests were subjective, of course, but that’s the reality of language interpretation: by and large, ‘quality’ is in the ear of the beholder.

Head to our AI quality guide for more in-depth information.

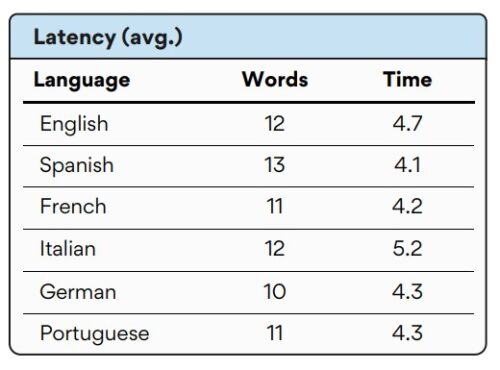

Latency results

This one is simpler. Latency is the lag between one person speaking and the listener(s) beginning to hear the translated voice: essentially, how long you have to wait to hear the translation. It can be measured in words or in seconds.

For this test, we measured both, for an average score of:

Lag in seconds: 4.1 seconds

Lag in number of words: 9.3 words
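As a rough illustration of the two units, the Python sketch below computes both lags for a single utterance. It assumes we have the onset time of each source word and the moment the translated audio starts; the `latency` function, its parameters, and the sample timestamps are hypothetical, not our actual measurement tooling or data.

```python
from bisect import bisect_right


def latency(source_word_times, translation_start):
    """Return (lag_seconds, lag_words) for one utterance.

    source_word_times: sorted onset times, in seconds, of each source word.
    translation_start: time, in seconds, at which translated audio begins.
    """
    if not source_word_times:
        raise ValueError("at least one source word is required")
    speech_start = source_word_times[0]
    if translation_start < speech_start:
        raise ValueError("translation cannot start before the speaker")
    lag_seconds = translation_start - speech_start
    # Number of source words already begun when the translation becomes audible.
    lag_words = bisect_right(source_word_times, translation_start)
    return lag_seconds, lag_words


# Hypothetical word onsets for a speaker talking at a steady pace.
word_times = [0.0, 0.4, 0.9, 1.3, 1.8, 2.4, 2.9, 3.5, 4.0, 4.6]
lag_seconds, lag_words = latency(word_times, translation_start=4.1)
print(f"Lag in seconds: {lag_seconds:.1f}")
print(f"Lag in number of words: {lag_words}")
```

Averaging these two values over many utterances gives figures in the same form as those reported above. Note that the word count depends on speaking rate, which is why the two measures are worth reporting side by side.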

For more information on what latency is and what causes it, we recommend checking out our AI quality guide.

Evaluating KUDO AI Quality: final thoughts

So, what do these results mean? Well, as an industry benchmark, we think it’s fair to say that we’re pretty proud of how KUDO AI performed.

Nevertheless, any translation quality test needs to be taken with a pinch of salt. We will never stop repeating that translation quality is subjective and notoriously difficult to measure. On top of that, our AI Speech Translator has received so many updates since these tests took place that we would likely score higher in all three categories in a new round of testing. And you can bet that we have many more upgrades on the way.

Our advice is therefore the following: if you’re thinking of implementing an AI speech translation system, or are simply curious to try one, we invite you to test KUDO AI yourself and make up your own mind. Ultimately, the quality of any solution is tied to the particular use case for which you need it, so the easiest way to judge it is to run a real-world test. And as it happens, we offer a no-obligation free trial of KUDO AI.

Want to test KUDO AI Speech Translator?

Interested in carrying out your own AI quality assessment? Get in touch with our team to request a demo.