How accurate and reliable are AI speech translators? What is the quality of human vs AI interpretation? Here’s everything you need to know.

We’re going to level with you; this blog post looked very different when it was first drafted by our CTO. And we’ll follow up this admission with a learning: don’t ask one of the world’s leading experts in speech-to-speech translation to answer a complex technical question if you’re not prepared for a complex technical answer.

So, how ‘good’ is AI speech translation today? More and more companies are investing in this technology, but by what metrics can you measure quality? And how does it compare with human interpretation, or from one AI provider to another?

Well, if you speak ‘CTO’ yourself, we recommend jumping straight to the bottom of this post to request our downloadable guide to AI speech translation quality, which includes templates for evaluating real-time machine translation systems yourself.

For those who prefer their technical information with a side of simplicity, however, read on. (And before you go any further, we’re not talking about AI-translated voice dubbing here—live, conversational translation is another ball game entirely).

Is AI speech translation accurate and reliable at this early stage?

If you’re searching for a definitive percentage or otherwise, we’re going to have to disappoint you: (1) there is no universally accepted definition of ‘translation quality’, and (2) numerous variables influence how users perceive it. The real challenge is in fact working out how to evaluate the data. And that’s where we can help.

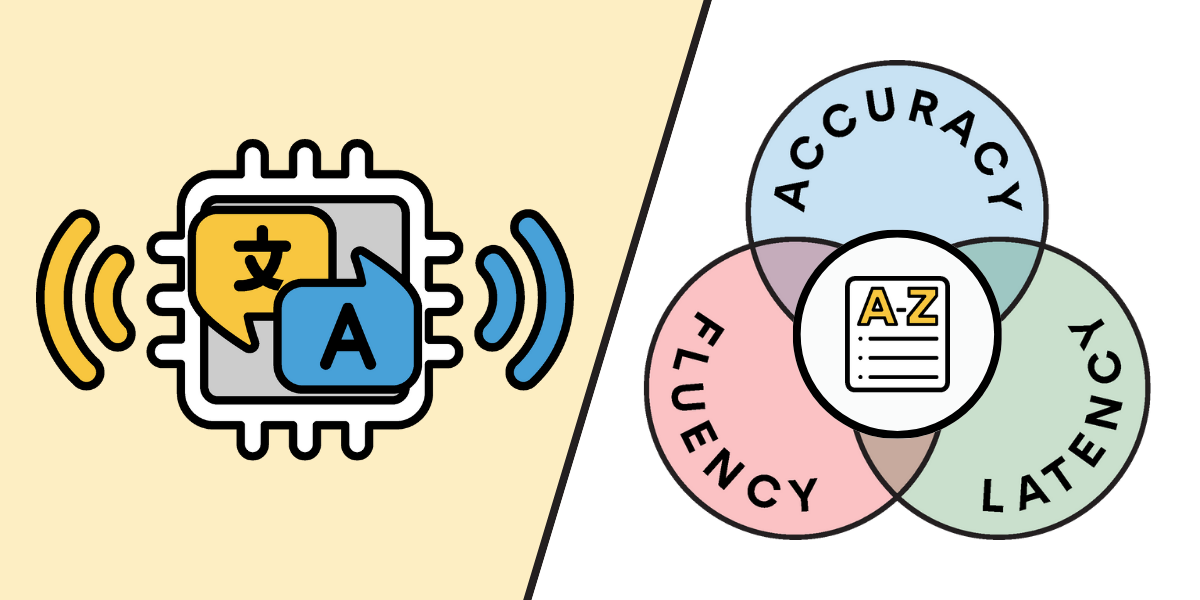

First, let’s break down how you measure ‘translation quality’ – and this is true of both human and AI:

Accuracy and fluency in AI speech translation – the verdict:

If we’re measuring speech translation against these two KPIs alone, AI translation would get something along the lines of an A- score (with an A+ being as close to a native speaker as possible).

This is thanks to the growing sophistication of text-to-text machine translators like Microsoft Azure, Google Translate, and DeepL, which make up some of the major building blocks of speech-to-speech architecture. But there’s a third component of quality which might determine user experience more than anything else…

What is latency?

Latency in translation refers to the lag between you speaking and your listener hearing your translated voice. In technical terms, it’s the duration, in seconds, between the moment a word is uttered in the original language and the moment the corresponding translation is available. It can also be measured as the number of words the listener has to wait before getting the translation.
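To make the two measurements concrete, here is a minimal sketch of how they could be computed from timestamps. It assumes you already have, for each source word, the time it was spoken and the time its translation became available; the `AlignedWord` type and both function names are hypothetical and for illustration only, not part of any specific product or library.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class AlignedWord:
    # Hypothetical record: one source word aligned with its translation.
    source_time: float  # seconds at which the source word was spoken
    target_time: float  # seconds at which its translation became available


def average_lag_seconds(words):
    """Mean delay, in seconds, between a word and its translation."""
    return mean(w.target_time - w.source_time for w in words)


def average_lag_words(words):
    """Mean number of further source words spoken before each translation arrives.

    Assumes `words` is sorted by source_time.
    """
    source_times = [w.source_time for w in words]
    lags = []
    for index, word in enumerate(words):
        # Source words already spoken when this translation became available.
        spoken = sum(1 for t in source_times if t <= word.target_time)
        # Subtract the words up to and including the current one.
        lags.append(spoken - (index + 1))
    return mean(lags)


# Illustrative data: one word every 0.5 s, each translated 2 s later.
sample = [AlignedWord(i * 0.5, i * 0.5 + 2.0) for i in range(4)]
print(average_lag_seconds(sample))
print(average_lag_words(sample))
```

The word-based figure shrinks towards the end of an utterance because no further words are spoken, so on short samples it tends to understate the lag a listener experiences mid-speech.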

What causes latency?

A combination of you, your device, and the AI translator. Technically, the processing speed of all the AI components involved and even your internet connection play a significant role. Linguistically, the system may need a certain amount of context (i.e. a number of words) before it can produce a coherent translation. How much context it needs depends on the language combination and on how a person talks.

Latency in AI speech translation – the verdict:

At this stage in the development of AI technology, we would give latency a conservative B score overall, although this very much differs from solution to solution. More importantly, the extent to which you feel lag in an AI speech translator depends on your meeting or event format; lag is going to be much less noticeable in one-way communication (e.g. a live event or presentation) than in a multi-way conversation. Or, at least, that’s the case today.

Bear in mind that latency is not specific to machine translation; even human interpreters need several seconds to gauge what you’re saying before starting to translate it. Either way, it stands to reason that a good AI speech translator needs to have the lowest latency possible without impacting quality, in order for the conversation to flow in real time.

AI translation providers are busy working on this balance, but expectations should reflect where we’re at in the development of this technology. Progress in the last six months has surpassed all predictions—in one upgrade alone, our team increased translation accuracy in the KUDO AI engine by 84%—but Rome wasn’t built in a day.

See the outcome of KUDO AI’s performance review on accuracy, fluency, and latency for more in-depth results.

Our assessment: where does AI translation quality land overall?

We get it—taking a chance on a new technology can be daunting. It’s natural to want proof of quality before testing an AI speech translator in a real meeting or event.

Unfortunately, “how good is AI” is as sweeping a question to unpack as “how fast is a car?” If we absolutely had to give an approximate grade for the technology as a whole, we’d go with something like a B+ depending on the use case, language, speaker, and most importantly, the provider. But again, you’ll have to take this grade with a mammoth pinch of salt.

With that in mind, what we can offer, instead, is an overview of the following:

1. What is the quality of KUDO AI?

We’d be remiss not to include KUDO AI in this discussion, given that quality is the number one factor guiding our product development. So, here we go:

- We have nearly 4,000 users and a 4.1/5* user rating.

- Some of the world’s biggest cosmetics brands, NGOs, hotel chains, political organizations, and government entities regularly use our Speech Translator.

- We scored 4.1/5 for fluency and 4.25/5 for accuracy in a comprehensive series of blind user tests carried out by linguists in May this year.

2. Is AI as good as human interpretation?

The good news: as providers of both an AI Speech Translator and human interpretation, we’re the most objective people to address which is ‘better’. The bad news: we would have to double the length of this blog post to do so. And the conclusion will be something you might not want to hear: the two are incomparable. Just as you can’t definitively measure the quality of AI speech translators, you can’t measure it for human interpretation. Pitting the two solutions against each other is like comparing apples and oranges; it is not a legitimate comparison.

On the whole, AI gives you the flexibility of an on-demand solution 24/7, the ability to customize translation based on subject matter, and more accessible pricing. Human interpreters still have the edge when it comes to conveying nuanced conversation and emotion, and to the more pleasant aural experience of hearing a less robotic voice.

We advise against seeing this as an either/or comparison and recommend considering what you specifically need language support for. Nevertheless, if you do want to dive further into the topic, we recommend reading our CEO’s take on human interpreters vs AI.

3. Is AI speech translation ‘good enough’ yet?

Is AI speech translation perfect for all use cases today? No. Is the quality high enough to be used to make live meetings and events more accessible and engaging to international participants? We can only speak on behalf of our own Speech Translator, but the answer is “yes, absolutely”. And we’re basing this on the growing number of clients using KUDO AI.

If it helps, we can share that the use cases for which we receive the best feedback regarding quality are the following:

- Webinars

- Presentations

- All Hands meetings

- Training programs

- Product launches

- Marketing events

Looking for something more in-depth? 👇

Want to carry out your own AI quality assessment?

Get in touch with our team to request a demo.